The Need for Speed: Demand for Low-Latency Streaming Is High

According to Bitmovin’s “Video Developer Report 2019,” latency was a concern of 54% of all its survey participants. Digging into the numbers, subsequent questions revealed that almost 50% of survey participants planned to implement a low-latency technology over the next 1–2 years, with over 50% seeking latency of under 5 seconds and 30% seeking latency of under 1 second (Figure 1).

All of this bodes well for an article on low-latency options, don’t you think? I’ll start with a list of things to know about low-latency technologies, then provide a list of considerations for choosing one.

Figure 1. Latency plans and expectations from Bitmovin’s “Video Developer Report 2019”

WebRTC

Most low-latency solutions use one of three technologies: WebRTC, HTTP Adaptive Streaming (HAS), or WebSockets. Per the WebRTC.org FAQ page, “WebRTC is an open framework for the web that enables Real-Time Communications in the browser.” WebRTC reached the Candidate Recommendation stage in the World Wide Web Consortium (W3C) standards organization but has not yet received final approval. Still, according to Wikipedia, WebRTC is supported by all major desktop browsers, as well as on Android, iOS, Chrome OS, Firefox OS, Tizen 3.0, and BlackBerry 10. This means that it should run without downloads on any of these platforms.

By design, WebRTC was formulated for browser-to-browser communications. As its name suggests, it is a protocol for delivering live streams to each viewer, either peer to peer or server to peer. In contrast, HAS-based solutions divide the stream into multiple chunks for the client to download and play. As adopted for large-scale streaming, WebRTC is typically the engine for an integrated package that includes the encoder, player, and delivery infrastructure.

Examples of WebRTC-based large-scale streaming solutions include Real Time from Phenix, Limelight Realtime Streaming, and Millicast from CoSMo Software and Influxis. You can also access WebRTC technology in tools like the Wowza Streaming Engine or those from CoSMo Software, although you’d have to create a scalable distribution system for large-scale applications. Latency times for technologies in this class range from 0.5 seconds for 71% of the streams (Real Time) to under 1 second (Limelight Realtime Streaming).

HAS-Based Solutions

HAS-based solutions are available from multiple vendors, and they operate in many different ways. All of them, however, deploy a form of chunked encoding that breaks the typical 2–6-second segment into chunks that can be downloaded without waiting for the rest of the segment to finish encoding. These chunks are shown on the bottom of Figure 2, which was taken from an Akamai blog post by Will Law.

Figure 2. Chunked encoding (from Akamai)
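
To make the timing benefit of chunked encoding concrete, here is a minimal Python sketch. It assumes a real-time encoder (1 second of video takes 1 second to produce) and a hypothetical 0.5-second chunk duration; the function name and the chunk size are illustrative assumptions, not values from the Akamai post.

```python
import math
from typing import List


def chunk_availability(segment_seconds: float, chunk_seconds: float) -> List[float]:
    """Return when each chunk becomes downloadable, in seconds after the
    segment starts encoding, assuming the encoder runs in real time."""
    if segment_seconds <= 0 or chunk_seconds <= 0:
        raise ValueError("durations must be positive")
    count = math.ceil(segment_seconds / chunk_seconds)
    # The last chunk may be shorter, so cap its time at the segment duration.
    return [min((i + 1) * chunk_seconds, segment_seconds) for i in range(count)]


# Hypothetical example: a 6-second segment split into 0.5-second chunks.
times = chunk_availability(6.0, 0.5)
first_chunk_ready = times[0]     # first media is downloadable here
whole_segment_ready = times[-1]  # without chunking, nothing is available until here
```

Under these assumptions, the first chunk is ready after 0.5 seconds, while an unchunked segment would not be available until the full 6 seconds had been encoded.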

Besides chunked encoding, these systems adjust the manifest file to signal the availability of the chunks, which are pushed to the origin server via HTTP 1.1 chunked-transfer encoding. In terms of expected latency, Law’s post states, “If distribution is happening over the open internet (especially over a last-mile mobile network where rapid throughput fluctuations are the norm), current proofs-of-concept show more sustainable Quality of Experience (QoE) with a glass-to-glass latency in the 3s range, of which 1.5s-2s resides in the player buffer.”
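
For readers unfamiliar with the wire format, the following Python sketch shows how HTTP/1.1 chunked transfer coding frames a message body: each chunk is prefixed by its size in hexadecimal, and a zero-length chunk ends the body. The helper name and the placeholder payloads are assumptions for illustration; in a real workflow, the payloads would be media chunks emitted by the encoder or packager, and an HTTP client library would normally handle this framing for you.

```python
from typing import Iterable


def encode_chunked(chunks: Iterable[bytes]) -> bytes:
    """Frame byte strings using HTTP/1.1 chunked transfer coding."""
    out = bytearray()
    for chunk in chunks:
        if not chunk:
            # A zero-length chunk marks the end of the body, so skip empties.
            continue
        # Chunk size in hex, CRLF, chunk data, CRLF.
        out += f"{len(chunk):X}\r\n".encode("ascii")
        out += chunk
        out += b"\r\n"
    # Terminating zero-length chunk followed by an empty trailer section.
    out += b"0\r\n\r\n"
    return bytes(out)


# Placeholder payloads standing in for media data.
body = encode_chunked([b"moof", b"mdat-data"])
```

Because the sender never has to declare a total content length up front, it can start transmitting a segment before the segment has finished encoding.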

There are many joint-development efforts around these schemas as well as some standards beyond chunked encoding and HTTP 1.1 chunked-transfer encoding, which have long been standards. One cluster of development was around Low-Latency HLS and the hls.js open source player, which includes contributions from Mux, JW Player, AWS Elemental, and MistServer. There’s also a Digital Video Broadcasting (DVB) specification for Low Latency DASH, and there are low-latency guidelines from the DASH Industry Forum. Obviously, each spec only applies to the designated technology, so you have to implement both to deliver low latency to both HTTP Live Streaming (HLS) and DASH clients.

There are also Common Media Application Format (CMAF) chunk-encoded solutions that allow delivery to both HLS and DASH clients from a single set of files. There are many advantages to low-latency CMAF-based approaches, including legacy player support. That is, if the player isn’t capable of low latency, it will simply retrieve and play the segments with normal latency. In addition, since the file format is standards-based, current techniques for DRM, captioning, and advertising insertion should work normally, and the HTTP segments should be cacheable and present no problem for firewalls.

For the most part, standards-based approaches like these provide the most ecosystem flexibility, allowing you to choose an encoder, packager, CDN, and player just like you would for normal latency transmissions.

WebSockets-Based Approaches

The third approach is typically based on a real-time protocol like WebSockets, which creates and maintains a persistent connection between a server and client that either party can use to transmit data. This connection can be used to support both video delivery and other communications, which is convenient if your application needs interactivity.

Like WebRTC implementations, those that use WebSockets are typically offered as a service that includes a player and CDN, and you can use any encoder that can transport streams to the server via RTMP or WebRTC. Examples include Nanocosmos’s NanoStream Cloud and Wowza’s Streaming Cloud With Ultra Low Latency. Wowza claims sub-3-second latency for its solution, while Nanocosmos claims around 1 second, glass to glass.

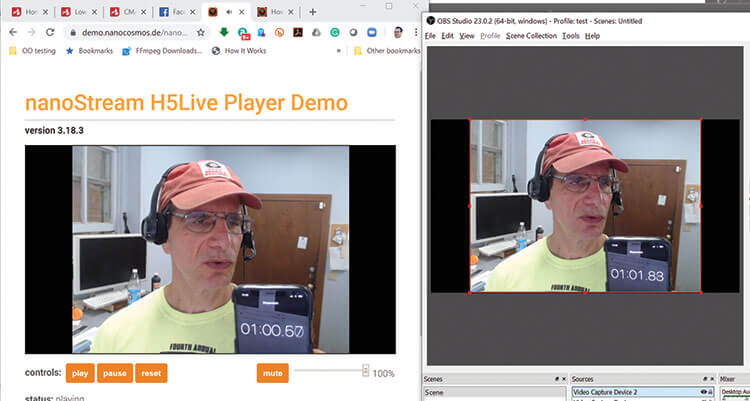

Figure 3 shows a test of the NanoStream Cloud in which I’m encoding via OBS on my HP notebook (right window), sending the stream to the Nanocosmos server, and playing it in the H5Live Player. I’m holding up an iPhone with the timer running, and latency is in the 1.3-second range. Note that iPhones don’t natively support WebSockets, so Nanocosmos created a unique Low-Latency HLS protocol to minimize latency.

Figure 3. Nanocosmos’s WebSockets-based service showed sub-1.5-second latency.

Latency Targets Largely Dictate Choice of Technology

With live streaming, there are three factors to balance: quality, latency, and robustness. You can get any two for an event, but you can’t get all three. As an example, the reason out-of-the-box HLS has an approximately 18-second latency is that each segment is 6 seconds long and the Apple default player buffers three segments before starting playback. The benefit is that the viewer will have to suffer a sustained bandwidth event before seeing any degradation. If you cut latency down to 3 seconds via any means, it only takes a short bandwidth blip to stop the stream.
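
The arithmetic behind that 18-second figure is simple enough to sketch in Python. This is a rough model only: it counts the player buffer and ignores encoding, packaging, and network delays, and the function name is a hypothetical one chosen for illustration.

```python
def buffer_latency(segment_seconds: float, buffered_segments: int = 3) -> float:
    """Approximate latency contributed by the player buffer alone."""
    if segment_seconds <= 0 or buffered_segments < 1:
        raise ValueError("segment duration and buffer count must be positive")
    return segment_seconds * buffered_segments


default_hls = buffer_latency(6)  # 6-second segments, three buffered
short_low = buffer_latency(2)    # 2-second segments, three buffered
short_high = buffer_latency(4)   # 4-second segments, three buffered
```

With 6-second segments the buffer accounts for 18 seconds; dropping to 2- or 4-second segments with the same three-segment buffer brings that down to 6 or 12 seconds.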

From a technology-selection perspective, there are three levels of latency. The first is “doesn’t matter,” which is a one-to-many presentation with little interaction and no live television. Think of a church service, city council meeting, or even a remote concert. For applications like these, drop your segment size to 2–4 seconds, and you can reduce latency to about 6–12 seconds with very little risk, no development cost, and minimal testing cost. Lower latency isn’t always better if you don’t absolutely need it.

The second level is “spoiler time,” or the proverbial enthusiast watching TV next door who starts shouting (and tweeting) about a touchdown 30 seconds before you see it. Most broadcast channels average about 5–10 seconds of latency; most of the latency estimates for HTTP-based technologies are in the 2–5-second range, which should comfortably meet this requirement, if not provide streaming with a noticeable advantage over broadcast.

The third level is “real time,” as required by interactive applications like gambling and auctions in which even 2 seconds is too long. At least in the short term, HTTP-based technologies probably can’t deliver this at scale, so you’ll be looking at a WebRTC- or WebSockets-based solution.
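
The three levels can be summarized as a small decision helper. The Python sketch below is only a rough reading of the ranges discussed above; the function name and the exact cutoffs (under 2 seconds, 2 to 5 seconds, and anything longer) are assumptions, and a real selection would also weigh the feature considerations that follow.

```python
def suggest_approach(target_latency_seconds: float) -> str:
    """Map a latency target to the technology family discussed in the text.

    The cutoffs are illustrative assumptions, not hard limits.
    """
    if target_latency_seconds <= 0:
        raise ValueError("target latency must be positive")
    if target_latency_seconds < 2:
        # "Real time": interactive applications like gambling and auctions.
        return "WebRTC or WebSockets"
    if target_latency_seconds <= 5:
        # "Spoiler time": compete with broadcast latency.
        return "low-latency HAS"
    # "Doesn't matter": one-to-many presentations with little interaction.
    return "standard HAS with shorter segments"


auction = suggest_approach(1)
sports = suggest_approach(4)
council_meeting = suggest_approach(10)
```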

If you’re a broadcaster, while 1 second of latency sounds great, WebRTC- and WebSockets-based solutions may have several key limitations. First, you’ll need captions and advertising support, which few services deliver. Second, you may need DRM; although several non-HAS services offer content protection, and forensic watermarking may soon be available, it’s a totally different solution from the CENC-based DRMs used for DASH, HLS, or CMAF. Third, your video quality for a given bitrate may suffer compared to HAS due to certain encoding constraints that may be imposed on some, but not all, WebRTC encoders.

Finally, low-latency HAS services produce content that’s both backward-compatible with players that don’t support low latency and immediately available for DVR or video-on-demand (VOD) delivery. WebRTC- and WebSockets-based systems can make their streams immediately available after the live broadcast for VOD, but not in the traditional adaptive bitrate (ABR) format without transcoding. If you’re broadcasting a single hour a month, the cost to convert the stream for HAS-based VOD (if desired) is negligible; if you’re producing 200 channels of TV each hour, it adds up pretty quickly.

The HAS Market Is Rapidly Evolving

As previously described, there were multiple groups working toward DASH- or HLS-based low-latency solutions. Apple managed to surprise them all when it announced its Low-Latency HLS Preliminary Specification, generally called LL-HLS. The spec differs from previous efforts in two key ways. First, it enables transport stream chunks and fragmented MP4 files, whereas DASH only supports the latter (technically, DASH supports transport streams in the spec, although they’re seldom, if ever, used). If you use transport stream chunks to deliver low-latency video to HLS, you’ll need a separate stream using fragmented MP4 files to support DASH low-latency output.
